RFP Agents: How AI Transforms Proposals

January 26, 2026

By Evie Secilmis

If you've spent any time in presales or proposal management lately, you've probably heard someone throw around the term 'RFP agent.' It sounds futuristic—like you're going to have a little AI assistant sitting next to you, autonomously cranking out proposals while you focus on the strategic stuff. The reality is both less dramatic and more useful than that vision suggests.

RFP agents represent a genuine shift in how teams can handle proposals, security questionnaires, and all those other document requests that eat up so much time. But there's a lot of hype mixed in with the substance. Let's cut through it and talk about what these tools actually do, where they shine, and where they still fall short.

What Is an RFP Agent, Really?

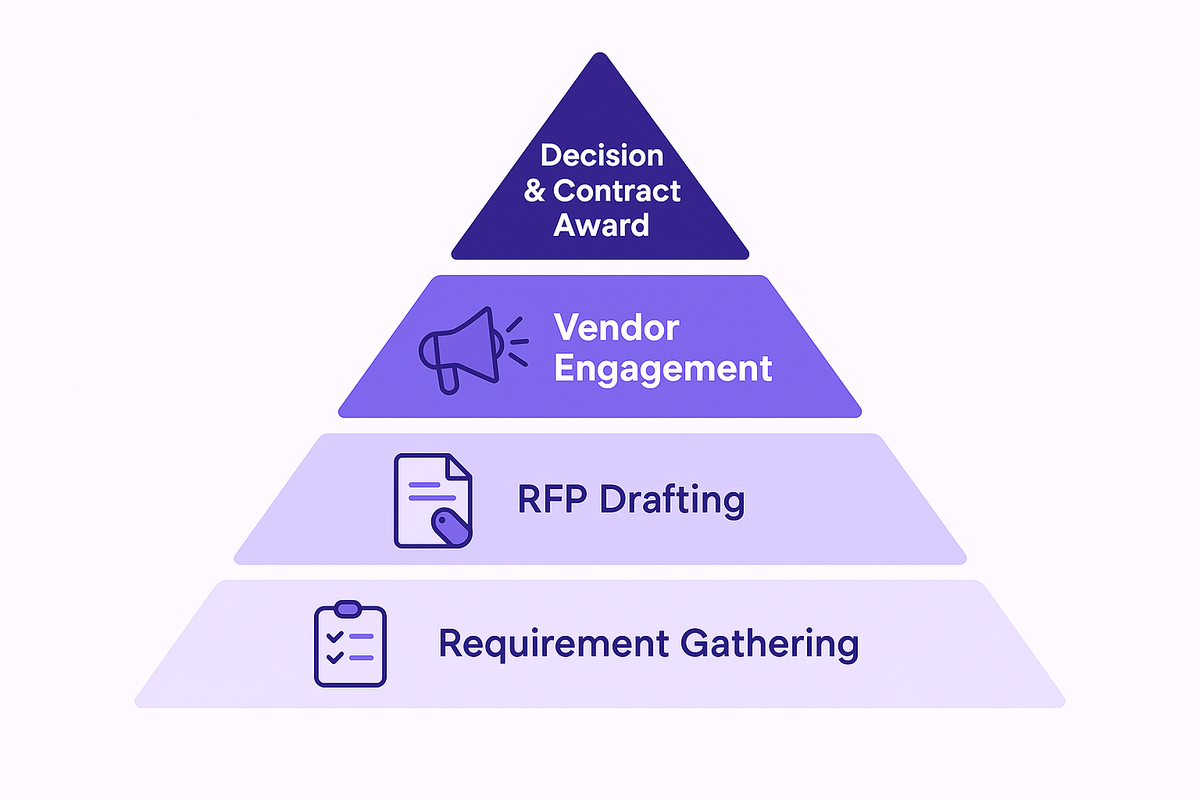

The term 'agent' gets used loosely in AI marketing, so let's be specific. An RFP agent is software that can take actions toward completing a proposal with minimal human direction. It's not just a search tool that finds relevant content when you ask—it's a system that can read a document, identify what needs to be answered, draft responses, and flag what needs human attention.

Think of the difference between a calculator and a bookkeeper. A calculator does math when you tell it to. A bookkeeper looks at your receipts, figures out what needs to be categorized, does the categorization, and asks you about the weird stuff. An RFP agent is trying to be the bookkeeper—handling the routine work autonomously so you can focus on judgment calls.

The 'agent' part matters because it implies a degree of autonomy. You upload an RFP, and the system doesn't just sit there waiting for instructions. It parses the document, extracts questions, matches them against your knowledge base, generates draft answers, assigns confidence scores, and presents you with a first pass that's ready for review. That's meaningfully different from tools that require you to drive every step.
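To make that flow concrete, here is a minimal Python sketch of a first pass. Everything in it is an assumption made for illustration: the `Draft` record, the `first_pass` function, the rule that any line ending in a question mark counts as a question, and plain word overlap standing in for a confidence score. Real products use far more capable parsing and matching; this only shows the shape of the loop.

```python
import re
from dataclasses import dataclass
from typing import Optional


@dataclass
class Draft:
    question: str
    answer: Optional[str]   # None when nothing in the knowledge base matched
    confidence: float       # 0.0 to 1.0, here just a word-overlap ratio
    needs_review: bool      # True when confidence falls below the threshold


def words(text: str) -> set:
    """Lowercased alphanumeric tokens of a string."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def extract_questions(document: str) -> list:
    """Naive extraction: every line that ends with '?' is treated as a question."""
    return [ln.strip() for ln in document.splitlines() if ln.strip().endswith("?")]


def first_pass(document: str, knowledge_base: dict, threshold: float = 0.5) -> list:
    """Parse, match, draft, and score. knowledge_base maps known questions to answers."""
    drafts = []
    for question in extract_questions(document):
        best_answer, best_score = None, 0.0
        for known_question, answer in knowledge_base.items():
            q, k = words(question), words(known_question)
            score = len(q & k) / len(q | k) if q | k else 0.0
            if score > best_score:
                best_answer, best_score = answer, score
        drafts.append(Draft(question, best_answer, round(best_score, 2), best_score < threshold))
    return drafts


if __name__ == "__main__":
    kb = {"Do you encrypt data at rest?": "Yes, stored data is encrypted."}
    rfp = "Section 1\nDo you encrypt data at rest?\nWhat is your roadmap for quantum computing?\n"
    for draft in first_pass(rfp, kb):
        print(draft)
```

The second question in the sample has no match, so it comes back with no answer and `needs_review=True`. That is the "asks you about the weird stuff" behavior in miniature.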

How RFP Agents Actually Work

Under the hood, modern RFP agents combine several AI technologies that have matured significantly in the past few years. Understanding the basics helps you evaluate whether a particular tool is actually sophisticated or just marketing itself well.

First, there's document understanding. The agent needs to look at an RFP (which might be a Word doc, a PDF, an Excel spreadsheet, or a web form) and figure out what's being asked. This sounds simple until you realize how many ways people format these things: questions buried in tables, requirements spread across multiple sections, attachments that need to be cross-referenced. Good agents handle messy real-world documents, not just clean examples.

Then there's retrieval. When the agent identifies a question about, say, your data encryption practices, it needs to find the right content in your knowledge base. This is where semantic understanding matters. The question might ask about 'protecting data at rest' while your documentation talks about 'encryption for stored information.' A keyword search fails here; semantic matching succeeds.
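The gap between the two approaches is easy to see in a toy Python example. Production systems typically get semantic matching from learned embedding models. The hand-written `CONCEPTS` map below is a hypothetical stand-in for one, and the function names are made up for this sketch.

```python
import re

STOPWORDS = {"at", "for", "the", "of", "a", "an", "in"}

# Hypothetical, hand-written concept map standing in for a learned embedding model.
CONCEPTS = {
    "protecting": "security", "encryption": "security",
    "rest": "storage", "stored": "storage",
    "data": "data", "information": "data",
}


def keywords(text):
    """Lowercased words with common stopwords removed."""
    return {w for w in re.findall(r"[a-z]+", text.lower()) if w not in STOPWORDS}


def jaccard(a, b):
    """Shared items divided by total distinct items."""
    return len(a & b) / len(a | b) if a | b else 0.0


def keyword_score(question, passage):
    """Literal word overlap, which is what a keyword search relies on."""
    return jaccard(keywords(question), keywords(passage))


def concept_score(question, passage):
    """Overlap after mapping each word to its concept (toy semantic match)."""
    def to_concepts(text):
        return {CONCEPTS.get(w, w) for w in keywords(text)}
    return jaccard(to_concepts(question), to_concepts(passage))


question = "protecting data at rest"
passage = "encryption for stored information"
print(keyword_score(question, passage))   # no words in common
print(concept_score(question, passage))   # same three concepts on both sides
```

The two phrases share no literal words, so the keyword score is zero, while the concept-level score is a full match.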

Finally, there's generation. The agent takes retrieved content and synthesizes it into a coherent response that actually answers the question asked. This is where large language models come in, but also where things can go wrong if the system isn't carefully designed. The Hallucination Risk section below covers how.

The Security Questionnaire Problem

If RFP agents have a killer app, it's security questionnaires. These things are brutal—hundreds of questions asking about your encryption, access controls, incident response, compliance certifications, and on and on. And here's the kicker: most of them ask the same questions in slightly different ways.

A typical enterprise SaaS company might handle 50-100 security questionnaires a year. Each one takes hours or days to complete manually. Multiply that out, and you've got someone's entire job just answering the same questions over and over, trying to maintain consistency while not going insane from boredom.

Software that auto-populates security reviews addresses this directly. Map your security documentation once to the common frameworks you encounter (SIG, CAIQ, SOC 2 controls, and so on), and the system can auto-fill most questions on new questionnaires. Teams using these tools report 70-80% of questions answered automatically, with humans reviewing and handling the edge cases.
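A minimal sketch of the map-once, fill-many idea, assuming each incoming question carries a control identifier. The IDs used here (`ENC-01`, `IR-02`, `AI-09`) and the `autofill` function are invented for the example and are not taken from any real framework or product.

```python
def autofill(questionnaire, approved_answers):
    """Fill every question whose control ID already has an approved answer.

    questionnaire: dict mapping control ID -> question text
    approved_answers: dict mapping control ID -> approved answer text
    Returns (filled answers, IDs needing a human, coverage ratio).
    """
    filled, needs_human = {}, []
    for control_id in questionnaire:
        if control_id in approved_answers:
            filled[control_id] = approved_answers[control_id]
        else:
            needs_human.append(control_id)
    coverage = len(filled) / len(questionnaire) if questionnaire else 0.0
    return filled, needs_human, coverage


# Hypothetical control IDs and answers, for illustration only.
approved = {
    "ENC-01": "Stored customer data is encrypted.",
    "IR-02": "We maintain a documented incident response plan.",
}
incoming = {
    "ENC-01": "Is data encrypted at rest?",
    "IR-02": "Do you have an incident response plan?",
    "AI-09": "How do you govern use of generative AI?",
}
filled, needs_human, coverage = autofill(incoming, approved)
print(f"{coverage:.0%} auto-filled; route to a human: {needs_human}")
```

Because every filled answer is copied from the single approved source, two questionnaires that ask about the same control receive the same wording.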

The consistency benefit is huge too. When different people answer similar questions differently across questionnaires, it raises red flags for evaluators. Automated responses from a central knowledge base eliminate that variation. Every answer traces back to the same approved source.

Where Agents Excel

Let's be honest about what these tools do well, because the benefits are real even if the marketing sometimes oversells them.

Speed on Routine Work

The biggest win is time savings on repetitive content. If you've answered 'describe your disaster recovery process' fifty times, there's no reason a human should write that answer a fifty-first time. Agents handle this kind of routine content quickly and consistently, freeing your team to focus on questions that actually require thought.

First Draft Quality

A good agent produces drafts that are genuinely usable, not just starting points that need complete rewrites. You're editing and refining, not creating from scratch. That's a different kind of work—faster and less mentally taxing. Teams consistently report that reviewing AI-generated drafts feels easier than staring at blank pages.

Consistency Across Responses

When you're submitting proposals to multiple prospects simultaneously, maintaining consistent messaging matters. Agents pull from the same knowledge base every time, so your positioning, technical descriptions, and compliance statements stay aligned. No more wondering if the response you sent last week contradicts what you're saying today.

Knowledge Base Hygiene

Here's a benefit people don't always expect: agents surface problems with your content. When the system can't find a good answer to a common question, that's a signal you have a gap. When it finds contradictory information, that's a signal you have a consistency problem. The process of using an agent often improves the underlying knowledge base.

Where Agents Still Struggle

Now for the honest part. These tools aren't magic, and pretending they are sets you up for disappointment.

Novel Questions

Agents are only as good as your knowledge base. When a prospect asks something you've genuinely never addressed before—a new compliance framework, an unusual technical requirement, a creative question about your roadmap—the agent can't help much. It might attempt an answer, but you'll need humans to actually think through the response.

Nuance and Customization

Boilerplate answers are fine for commodity questions, but the responses that win deals often require customization. Understanding a prospect's specific situation, referencing their industry or challenges, connecting your capabilities to their stated goals—this requires human judgment. Agents can get you 80% of the way there; the last 20% is where deals are won or lost.

Hallucination Risk

This is the big one. General-purpose AI models sometimes generate confident-sounding content that isn't actually grounded in your documentation. In an RFP context, this is dangerous—you might commit to capabilities you don't have or make statements that create legal exposure. Good RFP agents constrain their generation to your approved content, but not all tools do this well. Ask hard questions about how a vendor prevents hallucinations before you trust their output.
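One way to picture what "constrained to your approved content" means is a post-generation check that flags any sentence with little support in the source passages. The Python sketch below uses crude word overlap and a made-up `ungrounded_sentences` function with an arbitrary 0.6 threshold. It illustrates the idea only and is not how any particular vendor does it.

```python
import re


def tokens(text):
    """Lowercased alphanumeric tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())


def ungrounded_sentences(draft, sources, min_support=0.6):
    """Return draft sentences whose longer words mostly do not appear in the sources."""
    vocabulary = set()
    for passage in sources:
        vocabulary.update(tokens(passage))

    flagged = []
    for sentence in re.split(r"(?<=[.!?])\s+", draft.strip()):
        # Only words longer than three characters count as content words here.
        content = [w for w in tokens(sentence) if len(w) > 3]
        if not content:
            continue
        support = sum(w in vocabulary for w in content) / len(content)
        if support < min_support:
            flagged.append(sentence)
    return flagged


sources = ["All customer data is encrypted at rest using AES-256."]
draft = ("Customer data is encrypted at rest using AES-256. "
         "We also hold FedRAMP High authorization.")
print(ungrounded_sentences(draft, sources))
```

The first sentence is fully supported by the source passage. The second makes a certification claim that appears nowhere in it, so it is the one flagged for a human to verify or delete.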

Complex Document Formats

Some RFPs arrive as nightmares of nested tables, merged cells, embedded objects, and formatting that makes no logical sense. Agents have gotten much better at handling document complexity, but edge cases still exist. If your RFPs frequently arrive in unusual formats, test specifically with those formats before committing to a tool.

Evaluating RFP Agent Tools

If you're shopping for an RFP agent, here's what to actually look at:

Test With Your Real Content

Demos with vendor-prepared examples prove nothing. Insist on uploading your actual RFPs and your actual knowledge base content. See how the tool performs with your messy reality, not a curated showcase. Any vendor confident in their product will agree to this.

Understand the AI Architecture

Ask whether generated responses are constrained to your content or can draw from general knowledge. Ask how confidence scores are calculated. Ask what happens when the system isn't sure. The answers tell you whether this is a serious tool or a thin wrapper around a general-purpose language model.

Look at the Human-in-the-Loop Experience

The agent handles first drafts; humans handle refinement and approval. How smooth is that handoff? Can you easily see what content the agent used? Can you edit efficiently? Can you route specific questions to specific experts? The collaboration workflow matters as much as the AI capabilities.

Consider the Knowledge Base Investment

These tools require good content to produce good results. How much work is required to get your existing documentation into usable shape? How does the system handle updates? Who owns content maintenance? The ongoing care and feeding of your knowledge base is a real cost that should factor into your evaluation.

Frequently Asked Questions

Will RFP agents replace proposal writers?

No. They change the job rather than eliminate it. Humans shift from writing routine content to reviewing AI drafts, handling complex questions, adding strategic customization, and managing the overall narrative. The best proposal teams will use agents to handle volume while focusing their expertise where it matters most. Think of it as augmentation, not replacement.

How accurate are AI-generated responses?

It depends entirely on the tool and your content quality. Well-designed systems constrained to your approved content achieve 85-95% accuracy on factual questions. Systems that can hallucinate or draw from general knowledge are less predictable. Always review AI-generated content before submission—the goal is faster review, not blind trust.

What's the implementation timeline?

Basic setup takes 2-4 weeks for most teams—uploading content, configuring the system, training users. Full optimization with refined content, established workflows, and team adoption typically takes 2-3 months. You'll see value early, but it compounds as your knowledge base matures and your team gets comfortable with the tools.

How do we handle sensitive content?

Evaluate vendor security carefully. Look for SOC 2 certification, encryption standards, and clear data handling policies. Understand whether your content trains shared models or remains isolated. For highly sensitive content, some teams maintain separate knowledge bases with different access controls. The right approach depends on your risk tolerance and compliance requirements.

What ROI should we expect?

Most teams see 3-5x ROI in the first year through time savings alone. Calculate your current hours per RFP, multiply by volume, apply realistic efficiency gains (50-70% is typical), and compare to tool cost. Additional value comes from handling more opportunities and improving win rates through better response quality—but start with the time savings math; it's usually compelling on its own.
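The time-savings math can be written out as a short Python function. The input figures below are hypothetical placeholders, not benchmarks, and the sketch assumes "ROI" means first-year savings divided by annual tool cost (some teams use net gain over cost instead).

```python
def first_year_roi(hours_per_rfp, rfps_per_year, hourly_cost,
                   efficiency_gain, annual_tool_cost):
    """Return first-year time savings as a multiple of tool cost.

    efficiency_gain is the fraction of hours saved, e.g. 0.6 for 60%.
    """
    if annual_tool_cost <= 0:
        raise ValueError("annual_tool_cost must be positive")
    hours_saved = hours_per_rfp * rfps_per_year * efficiency_gain
    savings = hours_saved * hourly_cost
    return savings / annual_tool_cost


# Hypothetical inputs: 40 hours per RFP, 60 RFPs a year, $75 per hour,
# a 60% efficiency gain, and a $30,000 annual tool cost.
multiple = first_year_roi(40, 60, 75.0, 0.6, 30_000)
print(f"{multiple:.1f}x")
```

With those placeholder inputs, 1,440 hours saved at $75 per hour is $108,000, or 3.6 times the tool cost. Swap in your own numbers before drawing any conclusion.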

The Bottom Line

RFP agents are real tools solving real problems. They're not going to autonomously win deals for you, but they can dramatically reduce the time your team spends on routine proposal work. For security questionnaires specifically, the impact can be transformative—turning a multi-day slog into a few hours of review.

The key is appropriate expectations. These tools excel at leveraging your existing knowledge to handle repetitive questions quickly and consistently. They struggle with novel situations, nuanced customization, and anything that requires genuine judgment. Use them for what they're good at, and keep humans focused on what humans are good at.

The teams that adopt these tools thoughtfully—investing in their knowledge base, designing good review workflows, and maintaining realistic expectations—are gaining meaningful competitive advantages. The teams waiting for perfect autonomous AI are going to be waiting a while. The technology is good enough now to deliver real value. The question is whether you're ready to put it to work.
