Enterprise AI You Can Actually Trust

Every response grounded in your verified content. Every decision traceable to its source. Zero data leakage, complete governance control.

Three Pillars of Responsible AI

Our commitment to building AI that serves teams without compromising trust or control.

Smart flagging of uncertain responses

100% traceable to approved sources

Zero leakage through enterprise isolation

Real-time audit trails

See These Principles in Action

Every principle is backed by real functionality you can see, control, and audit. Here's how transparency, human oversight, and governance work in practice.

Smart Flagging for Focused Reviews

Iris flags responses it's uncertain about, so your team can focus their time where it matters most. No more guessing, over-editing, or letting risky content slip through.

See what needs review — Low-confidence answers are automatically flagged

Skip what's solid — High-confidence responses are clearly marked

Build trust in AI — Know what the model is sure of (and what it isn't)

Speed up approvals — Focus SME time on what actually needs a second look
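As a rough illustration of the flagging idea above, the sketch below splits draft responses into a review queue and a ready queue using a confidence cutoff. This page does not say how Iris scores confidence or where it draws the line, so the `DraftResponse` type, the `triage` function, and the `REVIEW_THRESHOLD` value are all hypothetical names chosen for this example.

```python
from dataclasses import dataclass

# Hypothetical cutoff: the actual scoring method and threshold are not documented here.
REVIEW_THRESHOLD = 0.75


@dataclass
class DraftResponse:
    question: str
    answer: str
    confidence: float  # assumed to be a score between 0.0 and 1.0


def triage(responses, threshold=REVIEW_THRESHOLD):
    """Split responses into (needs_review, ready) based on confidence."""
    needs_review = [r for r in responses if r.confidence < threshold]
    ready = [r for r in responses if r.confidence >= threshold]
    return needs_review, ready


drafts = [
    DraftResponse("Do you support SSO?", "Yes, via SAML 2.0.", 0.93),
    DraftResponse("What is your data retention period?", "90 days.", 0.41),
    DraftResponse("Is data encrypted at rest?", "Yes.", 0.88),
]

needs_review, ready = triage(drafts)
for r in needs_review:
    print(f"REVIEW ({r.confidence:.2f}): {r.question}")
```

With a split like this, subject-matter experts would only open the `needs_review` queue, and the cutoff could be tuned per team to trade review effort against risk.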

"Smart flagging helps teams focus where it matters most — no more guessing or over-editing."

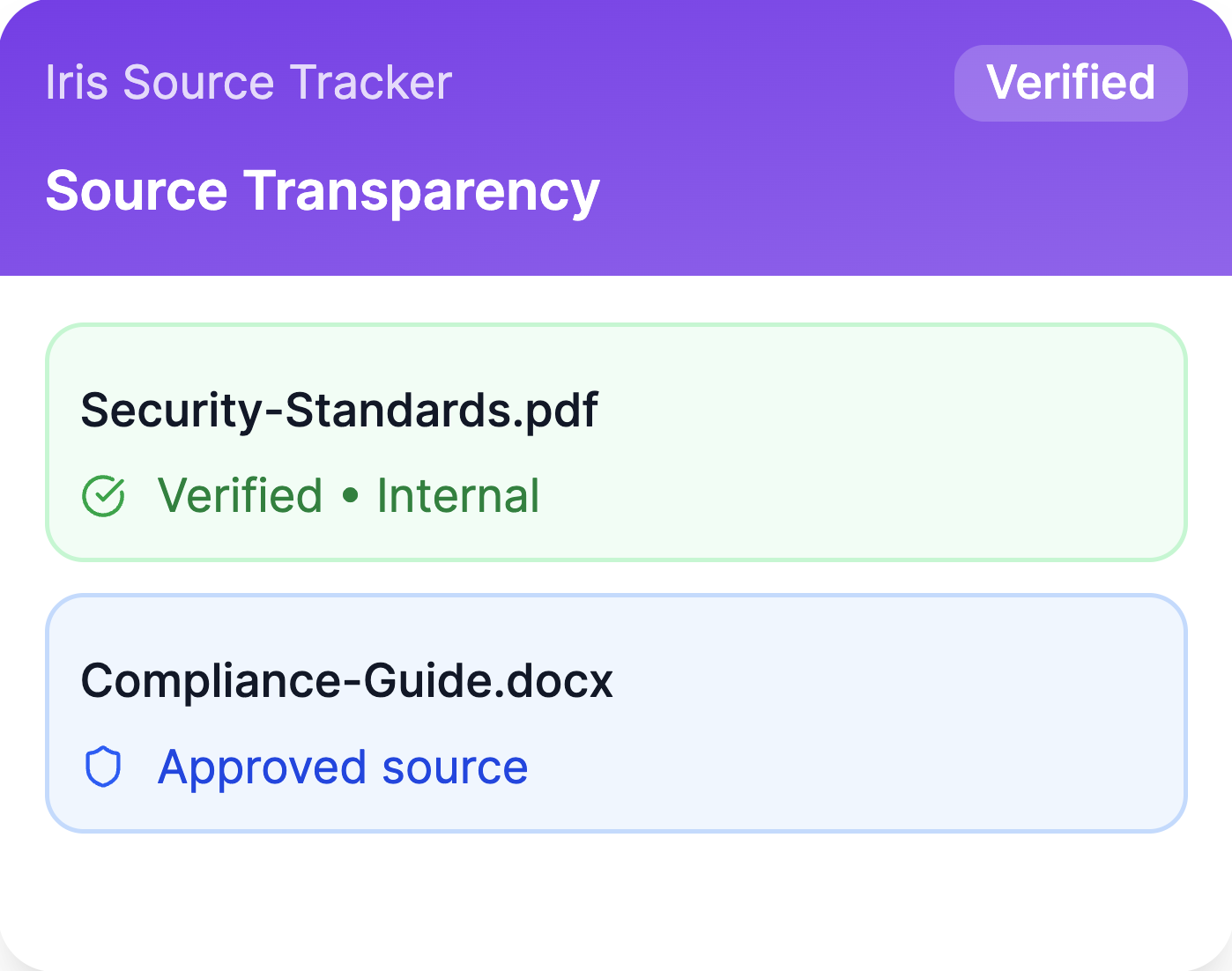

Zero Data Leakage. Total Transparency.

Your data stays secure while maintaining full visibility into sources and decisions.

No LLMs training on your data — All information stays in your environment, fully secure.

Source transparency — See which docs power each response and when they were last verified

Traceable outputs — Every answer is linked to its exact knowledge source

Content control — Only approved, permissioned documentation
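One way to picture source traceability and content control together is a response record that carries its sources with it and refuses to be built from unapproved documents. The sketch below is illustrative only: `SourceDoc`, `GroundedAnswer`, and `ground` are hypothetical names, and nothing here describes how Iris is actually implemented.

```python
from dataclasses import dataclass
from datetime import date


@dataclass(frozen=True)
class SourceDoc:
    doc_id: str
    title: str
    approved: bool        # assumed flag set by a content owner
    last_verified: date   # assumed date of the most recent review


@dataclass
class GroundedAnswer:
    text: str
    sources: list  # the SourceDoc records the answer is linked to


def ground(text, candidates):
    """Attach only approved documents to an answer; fail if none qualify."""
    approved = [d for d in candidates if d.approved]
    if not approved:
        raise ValueError("no approved source available for this answer")
    return GroundedAnswer(text=text, sources=approved)


docs = [
    SourceDoc("sec-001", "Security Whitepaper", True, date(2024, 5, 1)),
    SourceDoc("draft-07", "Unreviewed Draft", False, date(2023, 1, 15)),
]

answer = ground("Data is encrypted at rest.", docs)
for src in answer.sources:
    print(f"{src.title} (verified {src.last_verified.isoformat()})")
```

Keeping the source list on the answer itself means a reviewer can see which documents back a response and when each was last verified, without a separate lookup.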

"100% of Iris responses are grounded in verified, internal sources—never public data."

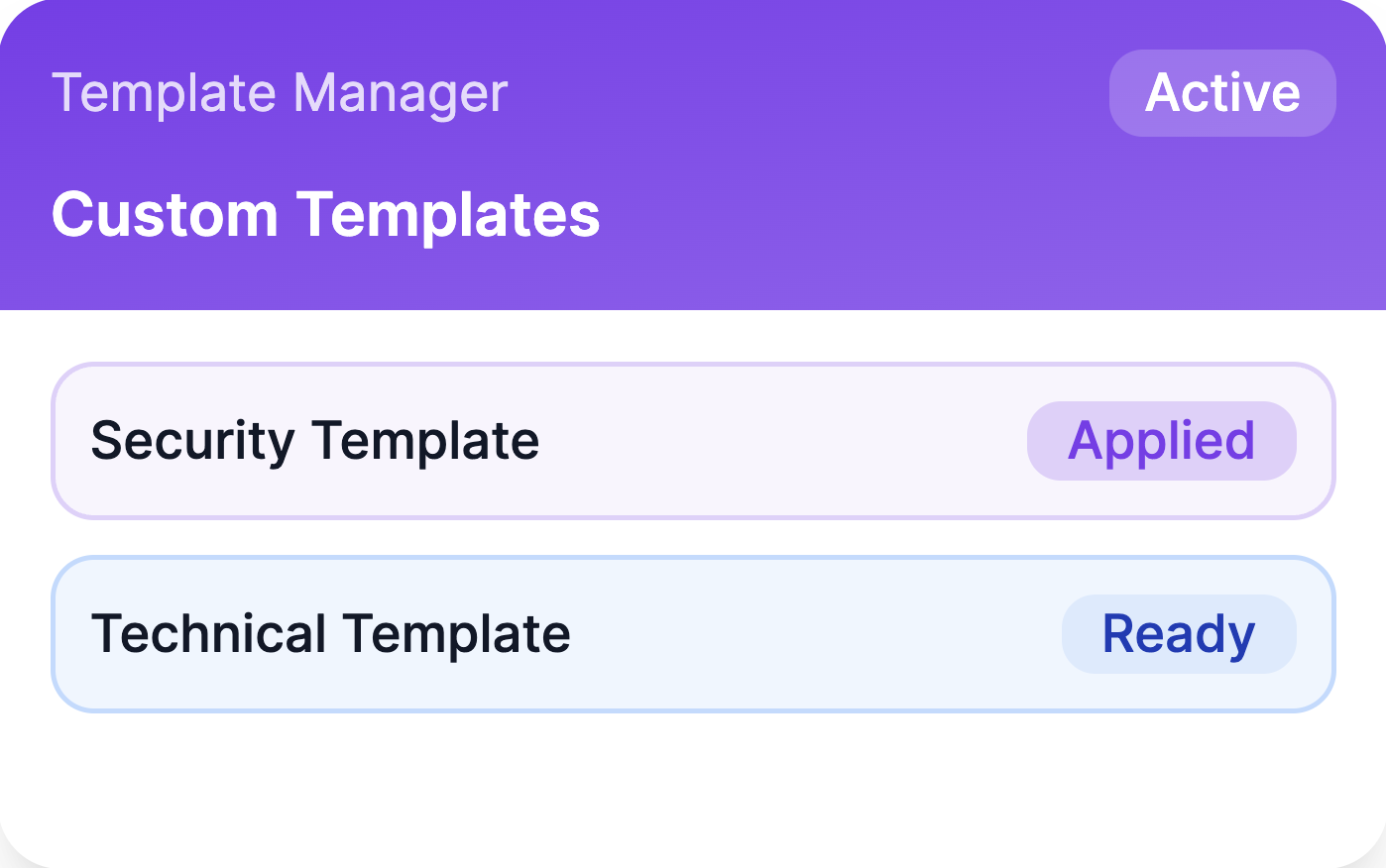

Custom Instructions and Templates

Maintain tone, structure, and compliance standards across every response — without rewriting from scratch.

Brand consistency — Automatic tone and style enforcement

Compliance templates — Pre-built structures for regulatory requirements

Custom workflows — Tailored approval processes for your team

Version control — Track changes and maintain document history

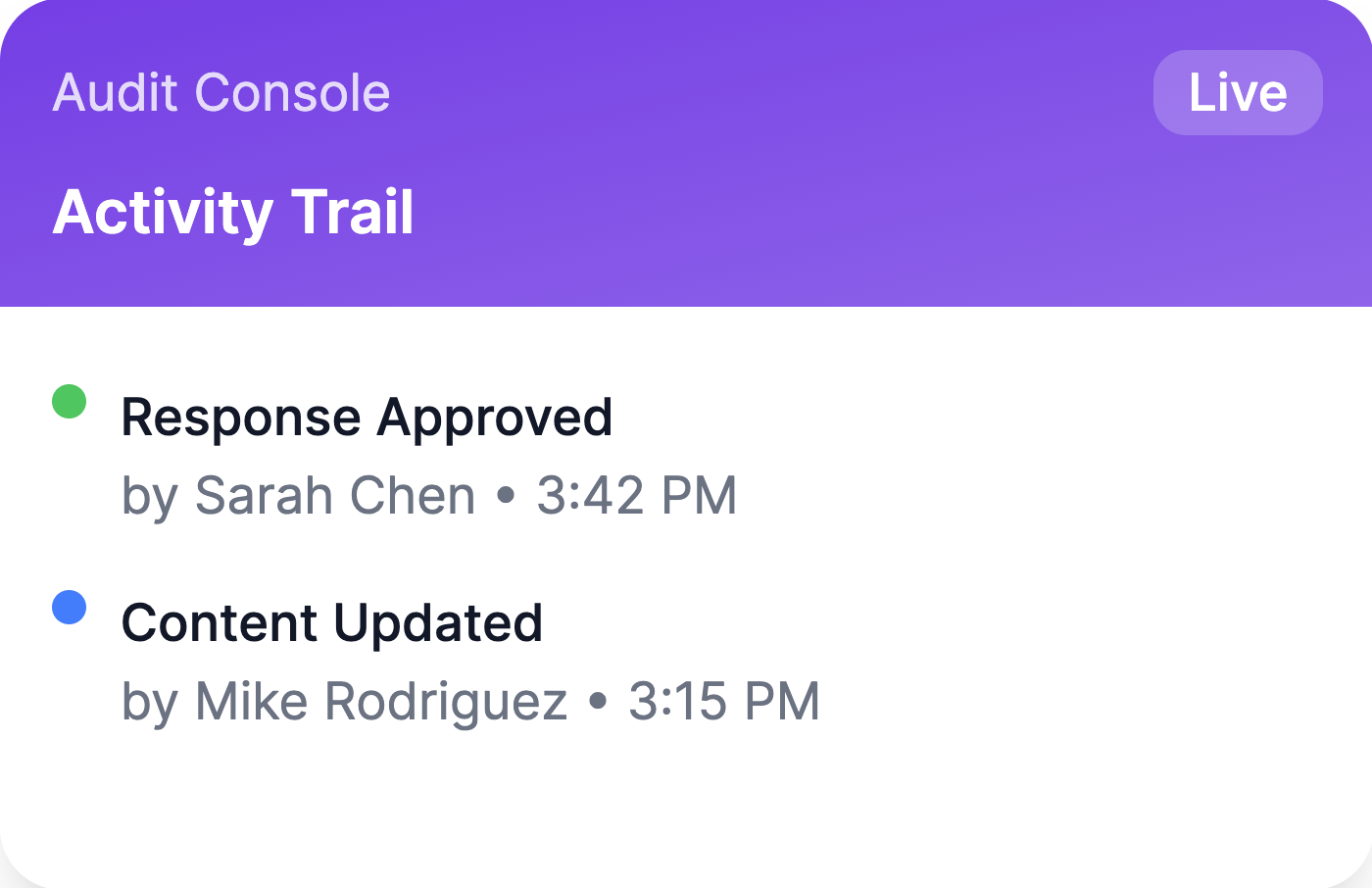

Full Revision History

All changes are tracked, versioned, and logged. You always know who edited what and when.

Complete audit trail — Every action is logged and timestamped

User tracking — See exactly who made each change

Export capabilities — Generate compliance reports instantly

Real-time monitoring — Live dashboard of all system activity
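The audit-trail features above can be sketched as an append-only log in which every action is stored with a timestamp and the acting user, and the whole log can be exported for a compliance report. This is a minimal sketch under assumed names (`AuditEntry`, `AuditLog`, `record`, `by_user`, `export_json`), not a description of the Iris data model or export format.

```python
import json
from dataclasses import asdict, dataclass
from datetime import datetime, timezone


@dataclass(frozen=True)
class AuditEntry:
    timestamp: str    # ISO 8601, UTC
    user: str
    action: str       # e.g. "edit", "approve" (illustrative values)
    document_id: str


class AuditLog:
    """Append-only log: entries can be added and read, never changed."""

    def __init__(self):
        self._entries = []

    def record(self, user, action, document_id):
        entry = AuditEntry(
            timestamp=datetime.now(timezone.utc).isoformat(),
            user=user,
            action=action,
            document_id=document_id,
        )
        self._entries.append(entry)
        return entry

    def by_user(self, user):
        """Return every entry made by one user, in recorded order."""
        return [e for e in self._entries if e.user == user]

    def export_json(self):
        """Serialize the full log, for example for a compliance report."""
        return json.dumps([asdict(e) for e in self._entries], indent=2)


log = AuditLog()
log.record("alice", "edit", "rfp-42")
log.record("bob", "approve", "rfp-42")
print(log.export_json())
```

Because entries are frozen and the log only exposes `record`, the history answers "who edited what and when" without allowing earlier entries to be rewritten.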

Enterprise-Grade Security You Can Trust

Built with enterprise security at its core. Your data never leaves your environment.

0% Data Leakage

100% Compliant

24/7 Monitoring

360° Audit Trail

Our Responsible AI Commitments

"At Iris, responsible AI isn't just a feature. It's a mindset. We audit model behavior, listen to user feedback, and evolve with the latest research and enterprise needs. Our goal is to give teams the confidence to adopt AI knowing they have transparency, governance, and human oversight at every step."

Ben Hills, CEO, Iris